This page is part of the final project by Nicoara Talpes for the EECS349 Machine Learning class taught by Professor Downey at Northwestern University. The project pursues an idea proposed by Professor Downey.

Motivation

Large texts that are transcribed into electronic format, by hand or with OCR techniques, contain errors or are incomplete. An example is the set of digital images of early English books on the EEBO (Early English Books Online) website. The goal of this project is to develop a novel way of proposing corrections for the incompletely transcribed words. The research question was: how accurate can our corrector be?

Solution

The solution has two parts. First, a language model looks at the context of each incomplete word and at the vocabulary of the corpus, and proposes a set of corrections for that word. We then compared the most probable correction for each word to a set of provided solutions to label it as right or wrong, and built our data set from these labeled corrections. Each instance has four features: the probability that the correction is right, the probability difference to the next most probable correction, the number of other corrections, and the number of characters missing from the incomplete word. Second, a classifier is trained on this data set and its accuracy is measured, and active learning is used to show that the correctness of a correction can be predicted better.
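As an illustration of the four features listed above, here is a minimal Python sketch. It is not the project's code: the function name `correction_features`, the `(word, probability)` candidate format, and the `_` marker for a missing character are all assumptions made for the example.

```python
from typing import Dict, List, Tuple


def correction_features(
    candidates: List[Tuple[str, float]],
    incomplete_word: str,
    gap_char: str = "_",  # assumed marker for one missing character
) -> Dict[str, object]:
    """Build the four features for the most probable correction.

    `candidates` is a hypothetical list of (word, probability) pairs, as a
    language model might return them for one incomplete word.
    """
    if not candidates:
        raise ValueError("need at least one candidate correction")
    # Rank candidates from most to least probable.
    ranked = sorted(candidates, key=lambda c: c[1], reverse=True)
    best_word, best_prob = ranked[0]
    # Probability of the runner-up, or 0.0 when there is only one candidate.
    next_prob = ranked[1][1] if len(ranked) > 1 else 0.0
    return {
        "correction": best_word,
        "probability": best_prob,
        "margin": best_prob - next_prob,
        "num_other_corrections": len(ranked) - 1,
        "num_missing_chars": incomplete_word.count(gap_char),
    }


# Made-up candidates for the incomplete word "l_ve".
example = correction_features(
    [("love", 0.6), ("lave", 0.1), ("live", 0.25)], "l_ve"
)
```

The label for each instance (right or wrong) would come from comparing `example["correction"]` with the provided solution for that word.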

Testing and training

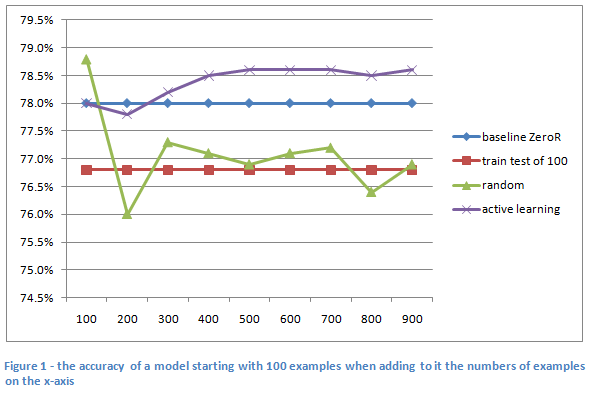

Overfitting was avoided by splitting the data set into a training set, a pool set, and a test set. Active learning examples are chosen from the pool set. I did not use cross validation, since it would not have helped. In most machine learning setups the training set is chosen randomly and the resulting model is then evaluated; this is passive learning. I compared the two approaches at nine points: each time, a hundred examples chosen by active learning were added to the training set, and the resulting model's accuracy was compared with that of a model trained after adding the same number of randomly selected examples. We counted the experiment a success if active learning produced a better model than passive learning.
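The comparison described above can be sketched as follows. This is an illustration under stated assumptions, not the project's implementation: the page does not name the classifier or the active learning strategy, so the sketch assumes a small logistic regression and uncertainty sampling (picking the pool examples whose predicted probability is closest to 0.5). The data is synthetic, and the toy run uses three rounds of ten examples rather than nine rounds of a hundred so that it finishes quickly.

```python
import math
import random


def predict_proba(w, x):
    """Probability of the positive class; the bias is the last weight."""
    z = sum(wj * v for wj, v in zip(w, x)) + w[-1]
    z = max(-30.0, min(30.0, z))  # clamp to keep exp() well behaved
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(X, y, epochs=100, lr=0.5):
    """Fit logistic regression weights with plain batch gradient descent."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = predict_proba(w, xi) - yi
            for j, v in enumerate(xi):
                grad[j] += err * v
            grad[-1] += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


def accuracy(w, data):
    """Fraction of (features, label) pairs classified correctly."""
    hits = sum((predict_proba(w, x) >= 0.5) == bool(t) for x, t in data)
    return hits / len(data)


def fit(data):
    return train_logreg([x for x, _ in data], [t for _, t in data])


def learning_curve(train, pool, test, rounds, batch, active, rng):
    """Test accuracy after each round of moving `batch` pool examples to train."""
    train, pool = list(train), list(pool)
    curve = []
    for _ in range(rounds):
        if active:
            # Assumed strategy: uncertainty sampling with the current model.
            w = fit(train)
            pool.sort(key=lambda ex: abs(predict_proba(w, ex[0]) - 0.5))
        else:
            # Passive learning: take a random batch from the pool.
            rng.shuffle(pool)
        train, pool = train + pool[:batch], pool[batch:]
        curve.append(accuracy(fit(train), test))
    return curve


def make_example(rng):
    """Synthetic instance with four features and a made-up labeling rule."""
    x = [rng.random() for _ in range(4)]
    return x, 1 if x[0] + 0.5 * x[1] > 0.75 else 0


rng = random.Random(0)
data = [make_example(rng) for _ in range(160)]
# Three disjoint sets: initial training set, pool set, test set.
train, pool, test = data[:20], data[20:100], data[100:]

active_curve = learning_curve(train, pool, test, 3, 10, True, random.Random(1))
passive_curve = learning_curve(train, pool, test, 3, 10, False, random.Random(1))
```

Comparing `active_curve[i]` with `passive_curve[i]` at each round mirrors the comparison in the text; on synthetic data like this the outcome says nothing about the project's actual results.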

Results

The results show that the model correctly predicts whether a new correction is right or wrong 78% of the time. Comparing the results at the nine checkpoints described above showed that a classifier trained with active learning is consistently more accurate than one trained on randomly selected examples, confirming our expectations.

For more information, here is a more detailed report.